Accelerate your most demanding HPC and hyperscale data center workloads with NVIDIA® Tesla® GPUs. Data scientists and researchers can now parse petabytes of data orders of magnitude faster than they could using traditional CPUs, in applications ranging from energy exploration to deep learning. Tesla accelerators also deliver the horsepower needed to run bigger simulations faster than ever before. Plus, Tesla delivers the highest performance and user density for virtual desktops, applications, and workstations.

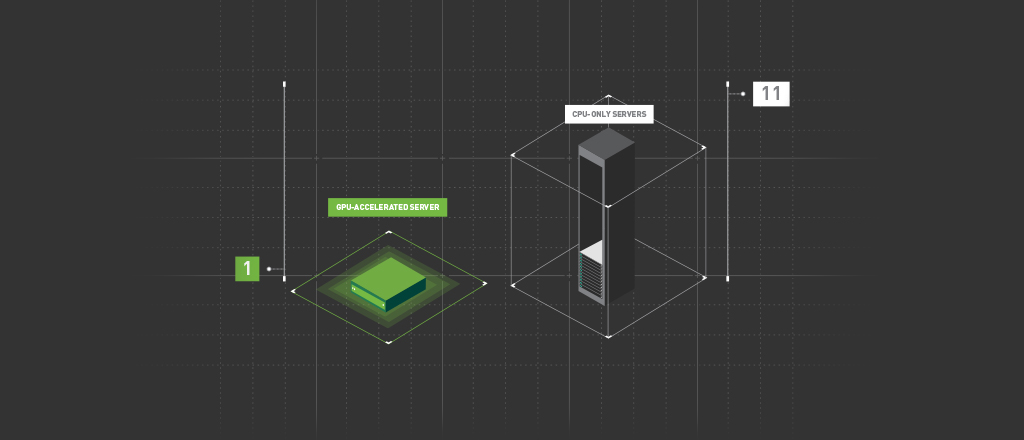

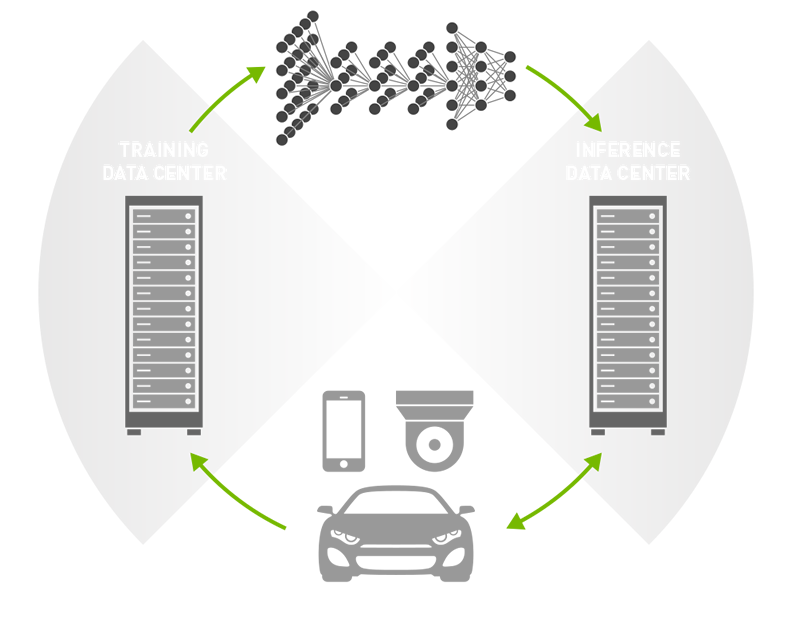

NVIDIA partners offer a wide array of cutting-edge servers capable of diverse AI, HPC, and accelerated computing workloads. To help customers choose the optimal server for each workload, NVIDIA has introduced GPU-Accelerated Server Platforms, which recommend ideal classes of servers for various Training (HGX-T), Inference (HGX-I), and Supercomputing (SCX) applications.

These platforms align the entire data center server ecosystem and ensure that, when a customer selects a specific server platform that matches their accelerated computing application, they’ll achieve the industry’s best performance.

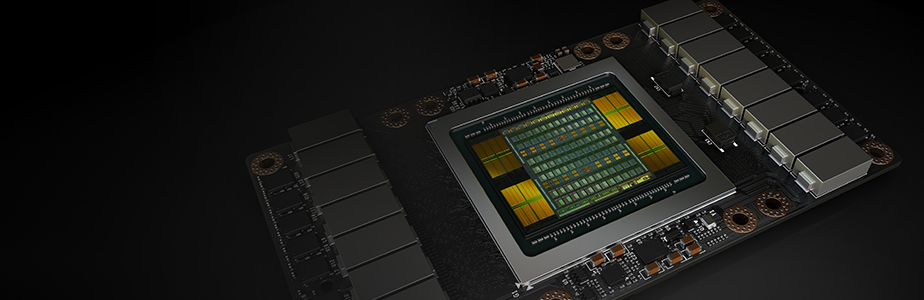

The Tesla V100 delivers 125 teraflops of inference performance per GPU. A single server with eight Tesla V100s can produce a petaflop of compute.
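The petaflop figure follows directly from the per-GPU number. As a rough sketch, the arithmetic can be written out as below; it assumes the 125 teraflops is a peak figure and that eight GPUs scale perfectly, which real workloads may not achieve. The function and constant names are illustrative only.

```python
# Figures taken from the text above; treated here as peak per-GPU numbers.
TFLOPS_PER_V100 = 125
GPUS_PER_SERVER = 8
TFLOPS_PER_PFLOPS = 1000  # 1 petaflop = 1,000 teraflops


def aggregate_pflops(tflops_per_gpu, gpu_count):
    """Sum of per-GPU peaks in petaflops, assuming ideal linear scaling."""
    return tflops_per_gpu * gpu_count / TFLOPS_PER_PFLOPS


server_pflops = aggregate_pflops(TFLOPS_PER_V100, GPUS_PER_SERVER)
print(f"{GPUS_PER_SERVER} x {TFLOPS_PER_V100} TFLOPS = {server_pflops} PFLOPS")
```

This is an aggregate of peak numbers, so it is best read as an upper bound on what a single eight-GPU server can deliver.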

Tesla P100 for PCIe enables mixed-workload HPC data centers to realize a dramatic jump in throughput while saving money.

The Tesla P40 offers great inference performance, INT8 precision, and 24 GB of onboard memory for an amazing user experience.

HPC data centers need to support the ever-growing computing demands of scientists and researchers while staying within a tight budget. The old approach of deploying lots of commodity compute nodes substantially increases costs without proportionally increasing data center performance. With over 550 HPC applications accelerated, including all of the top 15, all HPC customers can now get a dramatic throughput boost for their workloads while also saving money.